Jack Murray - Blog

Practical product discovery for growth at scale ups (a long, e2e guide)

9253 words (approx 47 min)

Good discovery is what separates world-class teams from average teams.

It’s how teams uncover insights and ideas that really move the needle.

It’s how teams build more trust with their leadership, granting them more and more autonomy.

And it’s how amazing shit gets shipped.

I define discovery as simply the phase of generating insight and setting direction.

This guide will address two of the most common problems team leads experience:

- How do I come up with great ideas? (not the same old ‘gamification’ or ‘remove X page’ ones)

- How do I pick the ideas to actually test? (not just using ‘RICE’ 🤮).

These are product discovery questions.

If you’re a product manager, designer, engineering, or marketing lead in a growth team and have thought these questions - this guide should be very valuable for you.

In my time as a product person I’ve launched hundreds of tests and experiments, failed and learned and been humbled many times, and generated tens of millions of incremental annual revenue doing so.

And I’m going to openly share what I’ve found works for product discovery in growth contexts, and what doesn’t.

Some symptoms that you’re doing growth discovery wrong are:

- You feel like getting a new goal sends you back all the way to a blank slate

- You don’t know where to start fishing for insights and ideas to test, or struggle to generate new ideas

- The same kinds of insights and ideas keep coming up whenever you or your team are coming up with test ideas - you feel a bit of deja vu

- You’re frustrated with the lack of interesting ideas that are surfacing to work on

- You don’t have a continuous flow of ideas to develop

If these feel familiar - this guide will be a painkiller for you.

Admittedly, it's lengthy and quite rambly - more than I planned when I set out. Regardless, hopefully it's valuable for you!

Feel free to reach out to me on Twitter or by email if you have any questions, or would like to chat about your specifics - I'm open to advising and mentoring if it's a good fit.

Who is this useful for?

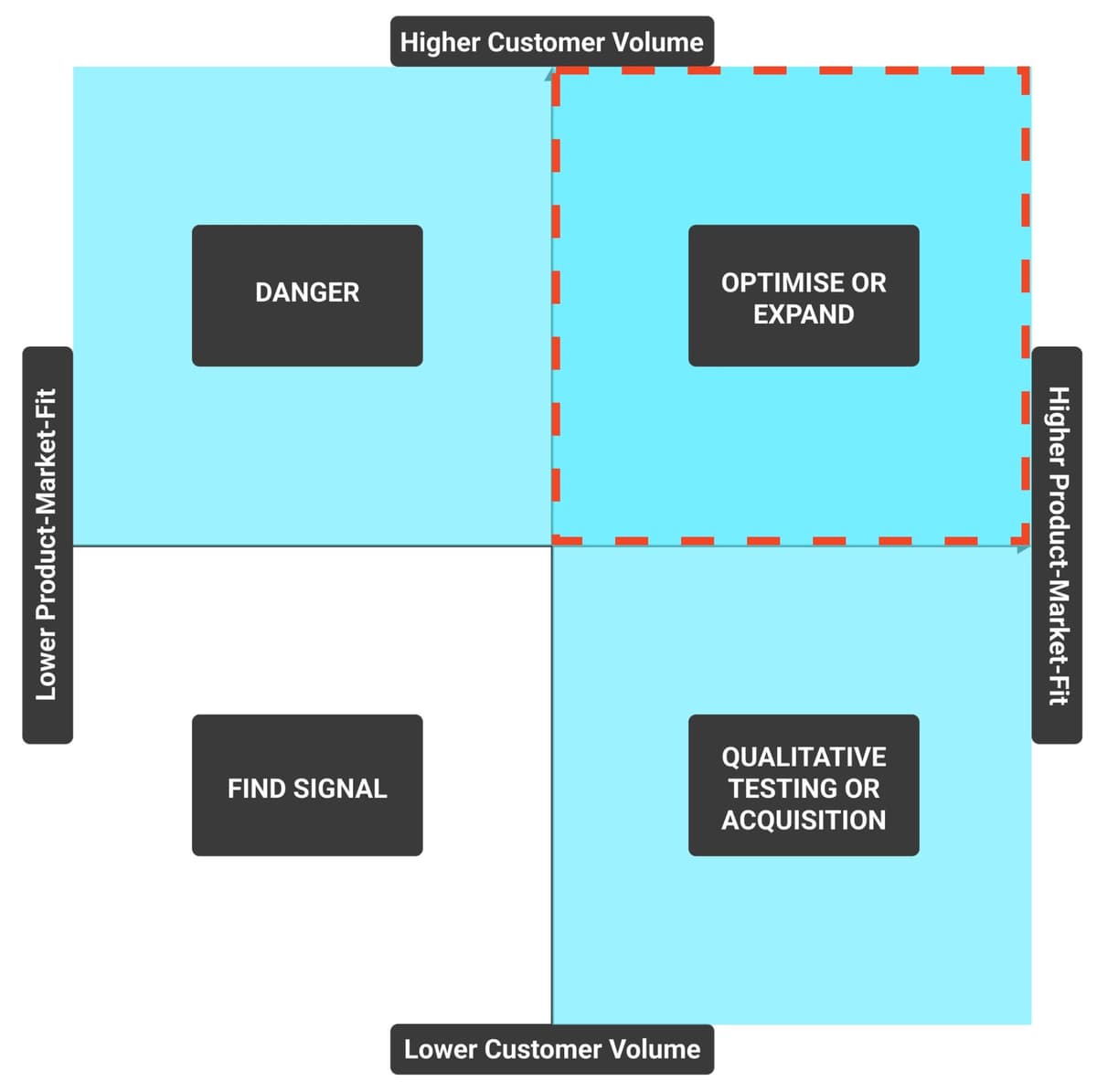

This guide is mainly for products that find themselves in the Optimise or Expand quadrant of what I call the “Growth Stage Matrix”. The majority of product managers, product designers, and tech and marketing leads of teams in established companies will find themselves here.

You might not be explicitly called a ‘growth’ team (you might be called a ‘core’ team or something else). But if you’re working towards an acquisition, engagement (activation, retention, etc) or monetisation goal on a product that already has decent product market fit and some scale, you’re most likely in this bucket.

In case this is confusing, big candidates that fit this Optimise or Expand quadrant are:

- Consumer scale-ups (e.g. Skyscanner, ClearScore, Monzo, etc)

- B2B scale-ups that have high customer volumes and usually self-serve (e.g. Stripe, Amplitude, Notion, etc)

- Mature products and companies (e.g. Booking.com, Facebook, Asos, etc)

- Teams working on product ‘spaces’ within a big product that are now core and have high customer volumes using it (e.g. Instagram ‘Reels’, LinkedIn ‘Jobs’, etc)

My experience has largely been in these two areas at different times in my career. In this top right quadrant, teams are often either Optimising or Expanding.

Optimising means you’re trying to grow the acquisition, engagement or monetisation of the business by connecting customers with existing value. In other words, taking a product or product space that is already in the top right and pushing it further upwards and to the right. It is not synonymous with ‘conversion rate optimisation’ or incrementalism, but these are included within it.

For example, figuring out how to convert customers better through a marketplace, how to convert site visitors into valuable customers, and iteration on a current feature set to better meet the satisfaction of users.

Expanding means you’re trying to grow acquisition, engagement or monetisation of the business by extending the value proposition into new or adjacent spaces. In other words, launching new things that will start in the bottom left of this diagram and then you will need to try and push them up to the top right (or discontinue them). This is what many people (wrongly, and I’m guilty of it too) call a ‘zero to one’ product inside a larger company. The reality is it’s ‘new’ but not really from zero, you’ve got leverage you can use to increase your chances of success.

For example, a credit marketplace extending into savings accounts, a flights search engine extending into hotels, or entering the product into a new market.

In agreement between your team and your leadership, you may explicitly choose one of these two (Optimise or Expand), or it might be left open. It depends a lot on the problem you’re trying to solve and the context of your product and company.

Let’s jump in…

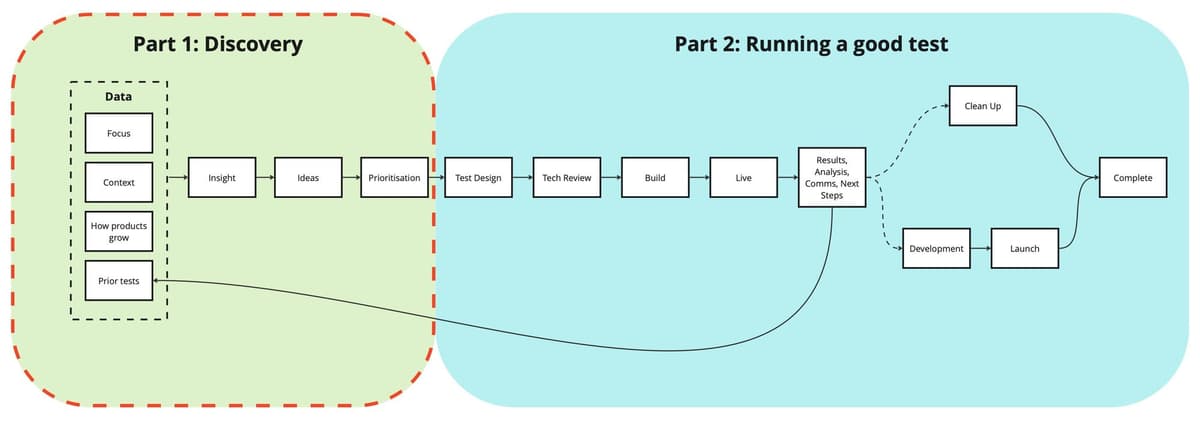

I found when writing this that there’s a logical split between “discovery” and the “running of good tests”. I’ll write a guide on running good tests if people are after my opinions on that, but this is in many cases well covered elsewhere. Also, if you have crappy idea generation skills, the running of good tests will only take you so far.

To reiterate and get a bit more specific, discovery in this context is simply the phase of collating and filtering down large amounts of information into relevant insights, specific ideas, and deciding on the course of action.

The best teams do discovery continuously.

I’m going to show you how to:

- Build a healthy continuous discovery lake

- Go fishing for insights in your discovery lake

- Generate large volumes of potentially great ideas

- Choose which ideas to prioritise and which to park for ‘maybe later, maybe never’

I bet most of us can relate to sitting in or running ideation workshops with your team where generic or unimaginative ideas like “gamification” or “remove X page” or some random tech feature seemingly pop up out of the ether.

That ends with this guide.

A quick note: this guide is not intended to be used as “process”. I bias against process. I don’t follow this rigidly, I follow it fluidly. This is intended to be a framework and not a sequence of events that must happen in this order. It’s principles-based, but I've tried to make it clear enough in order to be actually practical.

Let’s start at the start - making a discovery lake.

Making a ‘Discovery Lake’

I call the collated data I go fishing in for insights and ideas my ‘discovery lake’.

Literally I imagine a big lake, with new water coming into it from different sources.

Even if you don’t keep an intentional discovery lake, you’re still keeping one - it’s just in your and your team’s heads, unsystematic and chaotic. It’s where you came up with your last idea for a test to run by copying something you saw someone mention in a LinkedIn post or heard someone did on a podcast.

An unintentional discovery lake is riddled with biases - especially recency bias.

Let’s just say you’ll be quite inconsistent without one.

For a long time I had an unsystematic discovery lake. But I’ve learned that building an intentional one is an accelerant to amazing ideas and especially breakthrough ones.

Especially in growth contexts, I imagine 3 main sources of water flowing to it to build a healthy, continuously flowing discovery lake.

These sources are what I call:

- How products grow - Understanding of how products, features, and companies grow, and how they’re growing today.

- My context - the deepest of the rabbit holes specifically related to your situation, encapsulating your customer, product, business, market and industry, wider context and current and evolving growth model

- Prior tests - learnings and ideas and insights generated from your prior tests, experiments and launches

If you have a good discovery lake, then you can go fishing for some amazing insights and ideas.

The quality of your insights and ideas will be limited to the health of your discovery lake.

So it’s very important you get it right.

It’s like having a secret fishing spot.

If you’re panicking reading this and realising you don’t have one - don’t worry.

I’ll walk you through the key parts and with some ‘starter’ information to spawn your own discovery lake quite quickly, and you’ll be off to the races.

What is it practically though?

I’ll be honest - it’s nothing fancy.

It’s just a folder.

But it’s an extremely helpful one.

I’ve had it on my computer before, but for the last year or so I’ve stored it in Notion. Notion isn’t the perfect solution, but it does have some nifty features that help.

In it, I store and “steal” anything interesting that someone else produced around the business (e.g. our user insight team, some test or learning that another team did, or so on), as well as things I read online, heard in a podcast, produce myself, and so on.

It is my centre of information, insights, data, interesting business context and so on. A lot of it is partially organised, a lot of it is disorganised. But that’s okay. A lake swirls and moves around with a kind of current, and that’s the kind of organic disorganisation we need for creativity sometimes.

The point of the file is to let information flow into it from the different sources.

Later, you can go fishing in it when you need to. Maybe you’ll get a bite, catching some intriguing insight that leads to something worth testing.

This isn’t the file to be incredibly rigid and organised and refined on what you add to it. Actually, rigidity and organisation of this file will limit your creativity.

The primary purpose of this file is not to be the company discovery lake.

It’s your discovery lake.

The idea is that it is somewhere you can go to when you don’t know where to go. Again, think of it as your secret fishing spot, where there’s a higher chance of catching good fish.

To get you started, simply make a Notion or Google Drive folder with the following numbered sections.

- How products grow

- My context

- Prior tests

I like to add a focus document at the top level as well. This is so that when I come back to the discovery lake in future, I know what context this information falls within.

With these folders, you literally just dump stuff in as you see it.

It’s a habit you’ve got to practice and build.

Write your own stuff and shove it in here, link stuff, PDF stuff you find online, draw stuff, and so on. Unstructured is fine.
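If you keep your lake as plain folders on your computer rather than in Notion, the starting structure can be sketched in a few lines of Python. This is only a minimal sketch: the function name, the numbering prefixes and the `0. Focus.md` file name are my own assumptions for illustration, not a required layout.

```python
from pathlib import Path

# The three sources of water described above (the numbering is an assumption).
SECTIONS = ["1. How products grow", "2. My context", "3. Prior tests"]


def scaffold_discovery_lake(root: Path, focus: str) -> list[Path]:
    """Create the three section folders plus a top-level focus document."""
    root.mkdir(parents=True, exist_ok=True)
    created = []
    for name in SECTIONS:
        folder = root / name
        folder.mkdir(exist_ok=True)  # safe to re-run on an existing lake
        created.append(folder)

    # Hypothetical file name for the focus document; never overwrite an existing one.
    focus_doc = root / "0. Focus.md"
    if not focus_doc.exists():
        focus_doc.write_text(f"# Current focus\n\n{focus}\n", encoding="utf-8")
    created.append(focus_doc)
    return created
```

Calling something like `scaffold_discovery_lake(Path.home() / "discovery-lake", "Improve activation")` would give you an empty lake to start dumping things into. Everything after that is habit, not tooling.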

If you want to get real smart, you can also do automations. For example, I had a Notion Database in this file that pulled in information from others’ completed tests. I’ve also used various Notion extensions like Web Clipper to quickly save things I see online to my discovery lake. Be careful with automations though, as intentionally putting things into it is most valuable. Sorry, you can’t automate reading and actually understanding something until Neuralink puts chips in our brains.

The other big benefit of your discovery lake is that when you move on to a different focus, a lot of it can be lifted and shifted. A good chunk of information about your context and a good chunk about how other companies or products have grown can be stolen from yourself. It means by your third or fourth focus area at a company, you’re building up a more and more refined and clear view of these things too.

The discovery lake is not for defining specific ideas or direction or solutions per se. You can definitely dump this sort of stuff in there, but it’s not its purpose. Refinement comes later. Build a decently sized and healthy lake before you go fishing.

Design double diamond fans, funnily enough this is the first half of your first diamond. Diverge before doing any converging. The beauty of having a healthy continuous discovery lake is that you’ve always got this first diamond’s divergence going.

I find that without a healthy discovery lake, this part is the most time consuming part of the whole discovery process. I’ll bet that a lot of designers can relate.

By having a healthy continuous discovery lake, you’ve literally given yourself a massive head start. People will question how you moved so quickly when you pick up some new space.

Without it you’re effectively hiking over to others’ ponds or lakes to go fishing, not particularly sure if there’s even fish to begin with - and you don’t know how healthy the information is.

You’re relying on others’ curated hubs of information or scattered and fragmented reports or insights.

You’re also then putting a huge burden on your memory to remember all these different spots later.

You’re riddled with biases - a discovery lake gives you a bird’s eye view over everything.

The important part is that it’s your discovery lake. It’s your ‘second brain’ in a way.

This is why I can’t lift and shift my discovery lake and just give it to you. That’d not be nearly as useful or relevant, and it’d be information overload.

You need to evolve your own over time and grow it organically.

As you can probably guess, it’s also not something that is a ‘one and done’ activity to build a discovery lake. You have to feed it with more water and resources over time. Cutting it back sometimes is fine too, but it’s not as required as you’d think. But let’s just say that if you leave a large body of water without any flow, it’ll stagnate and go bad. Time-relevant information, new findings and new information are necessary for its health. This is the essence of continuous discovery.

Equally, feed it with complete garbage and that will slowly poison the whole thing. Some garbage going into it will be fine, but when the garbage hits a critical mass, you’ll recognise yourself that it’s a bit less of a useful place to go fishing for insights and ideas. At that point, sometimes it’s time to start up a new one and take what is valuable and useful and switch it to a new discovery lake.

Get into the habit of seeing or reading something, and taking the extra few seconds to add it to your lake. Future you will thank you for doing it. I find it relieves me of a lot of stress to try and remember things until I can actually use them.

If you’re a product manager, getting into this habit (or one like it) is a core skill. It’s one that separates a product manager from a project manager or delivery manager or scrum master or whatever other title is cooking these days.

If you aren’t building up ridiculous amounts of context in your problem space from all these angles, then you’re not doing a product manager role.

Alright, now we know how you’ll actually store this information, let’s take a look at each of the sources of information you want to flow into your discovery lake.

1. How products grow

This one is the most transferable when you move onto a new product or company or focus, so it’s a great one to forever train up on and build good habits.

In this section you want to capture everything you learn about how products (and companies) grow, and especially real world examples of how others have grown. It’s kind of as simple as that.

The hardest part is remembering to add things to this section of your discovery lake.

Here’s a bit of a starter seed for your “How products grow” section. Add them to yours only if you’ve actually read these though of course.

- Books

- Inspired by Marty Cagan

- Hacking Growth by Sean Ellis

- Alchemy by Rory Sutherland

- Thinking, Fast and Slow by Daniel Kahneman

- The Lean Startup by Eric Ries

- $100M Offers by Alex Hormozi

- Podcasts

- Lenny’s Podcast

- My First Million (the name is naff but it’s not really about that at all)

- The Breakout Growth Podcast - Sean Ellis and Ethan Garr

- Acquired

- On Brand… with ALF and Rory Sutherland

- Leah’s Product Tea

- Articles / Resources

- Competitor and adjacent case studies and examples

2. Your Context

This is the most meaty part of your discovery lake by far, and it’s the most dependent on your specific circumstances.

This should be the section with the most material in it. You also want to try and validate the quality of this stuff as it feeds into your discovery lake. Not too much, but don’t just chuck garbage in here.

The topics you want to cover in here start from user-first and extend to the industry you’re in at large. The list below is non-exhaustive, but it’s a categorisation of information I’ve found helpful:

- Customers / users - The who, what, when, where, why, and how they think about the space you’re in and how they use your product, what goals and jobs to be done they have, etc. Get close to them. Actually talk to them. ‘Mom test’ interview them. Analyse them in your analytics. This is often the most valuable section when paired with what we’ll get into later on for coming up with actual tests you can run.

- Your product - This is where you need deep understanding of your product, the core value proposition, the analytics through your product (e.g. funnel), the drop off points, the analytics you have and don’t, the technical limitations, the things that are easy and difficult to change, the navigation, the UX journeys your customers can go down, the unhappy paths, etc. As much as you can learn about the actual thing the customers use.

- The business - You need to deeply understand the business. The business model (ways the company makes money), the vision and strategy the company has and your place within it, the dynamics of decision making, the key players and stakeholders you need to influence internally, the legal requirements, etc.

- Market & industry - This is the world your product operates in, major players and the newcomers, the competitors and adjacents, trends that are catching on, regulatory changes and proposals, international dynamics of the same industry, etc. You won’t be using your competitors for direction on exactly what you should build, but you’ll damn well be using them for context and ideas.

- Wider context - Anything that might materially impact your customers, partners or your own company or product or feature’s current circumstances. This might include the cost of living in certain countries you operate in (or maybe your suppliers do), changes to regulation, wider economic circumstances and trends, etc.

I get it, that feels a bit overwhelming if you’re starting your discovery lake completely fresh. Where the hell should you start?

Start with trawling through your company’s current insights, learnings and user research files. Anything interesting, link it, duplicate it, or summarise it (manually, or really quickly with AI tools like ChatGPT) into your discovery lake. Reminder again - this isn’t some systematic perfectly organised thing. That’ll often hamper how you connect ideas together in your brain.

Once you’ve collected what already exists, you can evolve and add to it more organically from there. Someone shared some cool growth idea on LinkedIn? Swiped. Someone shared an article about new regulations coming into your industry? Swiped. Someone did a funnel analysis into your product? Swiped. Someone did some user interviews about how they think about your product? Swiped.

Again - this isn’t for being an organised, systematic place for storing information. This is a swirling, flowing lake of information.

Then you can get generating your own insight. User interview habits, looking into the quant and qual data available, understanding product usage, asking stakeholders to give a bit of a download from their brain about things (e.g. commercial managers about their partners), etc.

You need to be good at diving into the data yourself. If you’re equipped with Amplitude or some other self-serve analytics tool, it’s well worth the time investment to learn it and get very familiar with the events and ways to cut the data to find interesting things. If that means learning SQL or something else at your company, you need to take the challenge on in stride. No one said this would be easy.

I store interesting findings in dashboards and link those dashboards in my discovery lake under Product or Customer sections, depending on what they’re looking at. This becomes the evidence that will mount and convince others of your insights and hypotheses.
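To make ‘cutting the data’ a bit more concrete, here’s a minimal sketch of one of the simplest cuts: conversion rate by segment. The rows below are hand-written purely for illustration (they are not real data), and the function and segment names are my own assumptions. In practice this would come from your analytics tool or a SQL query against your own events.

```python
from collections import defaultdict

# Hypothetical rows for illustration only: (platform, did the visitor register?)
visits = [
    ("desktop", True), ("desktop", False), ("desktop", True), ("desktop", False),
    ("mobile_web", False), ("mobile_web", True), ("mobile_web", False), ("mobile_web", False),
]


def conversion_by_segment(rows):
    """Return the share of visitors that converted, per segment."""
    totals = defaultdict(int)
    converted = defaultdict(int)
    for segment, did_convert in rows:
        totals[segment] += 1
        if did_convert:
            converted[segment] += 1
    return {segment: converted[segment] / totals[segment] for segment in totals}


rates = conversion_by_segment(visits)
for segment, rate in rates.items():
    print(f"{segment}: {rate:.0%}")
```

A gap between segments like this is exactly the kind of finding worth saving to a dashboard and linking from your lake. On its own it isn’t an answer, just a rabbit hole worth going down.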

3. Your prior tests

The secret sauce of an amazing growth workflow is this iterative learning loop. With every test you launch, you win and/or you learn.

Technically even in ‘non-growth’ contexts you want a great systematic feedback loop like this, but in growth contexts it is a little more tangible.

Simply store relevant learnings from tests run at your company in here.

Anything you find interesting and relevant from tests you have previously run or others have.

I’m not just talking A/B experiments here, but also other things launched. That might be a new feature launch or the learnings from an email campaign or from brand insight or from some change the tech team made that revealed opportunity.

Maybe someone in a company meeting presented something they’re proud of that’s had impact. Dig into it a bit after the presentation and get a bit more nuance and capture it in your discovery lake.

This is also why a high test velocity is fundamental to the success of doing growth work, and of most companies in general. The higher the velocity of valuable tests being launched and learned from, the more collective learnings are generated, the more your discovery lake can grow, and the healthier this part of your lake becomes.

To clear up some concerns - I’m not arguing for doing a million tiny low hanging fruit tests by the way. Some tests are big. Very big. All companies will have to do some of those big boys. We’re not going into an optimisation hell hole, nor are we just looking for local maxima.

Growth being about incrementalism is a huge misconception. Growth is about creative, hypothesis-driven development. The key is finding the right balance between big and small tests to run, and seeing how fast and efficient you can push those out. More on prioritisation later though, let’s get back to prior tests.

In the experiment and test templates you use, there should be a section that captures interesting learnings and future ideas. If there’s not a post-test learnings section in your template at your company - add one! When you have time or if something is particularly interesting, it can be very valuable to dive into the details of others’ tests run. Secondary metrics that moved, unexpected lack of impact, where tests unexpectedly caused harm, and so on mightn’t be captured in the summary of learnings.

Alright, I’ve rambled on long enough about discovery lakes.

Insights

You’ve got a discovery lake and now you’re ready to go fishing for some good insights.

But first, what is an ‘insight’?

To me, an insight is simply an interesting fact that you can back up with data from your discovery lake that represents either:

- A potential customer value problem (and thus potential opportunity); or

- A potential business value problem (and thus potential opportunity).

I don’t want this to be theoretical, so here are some examples:

- Site visitors on desktop convert into registered customers much more than site visitors on mobile web

- A huge portion of people that visit our marketplace are not eligible to take out any products

- People value seeing screenshots and visuals of what is ahead in their journey in order to commit

- Most of our users are buying our products because they believe we’re the luxury brand in the space

An insight must be backed with evidence - and it must represent something relevant.

The evidence can be circumstantial. You’re not in a courtroom.

It doesn’t need to be beyond a reasonable doubt. A lot of the best ideas are partial leaps of faith, but they should arise out of data.

You also don’t want to be spouting bullshit to yourself and others. You need to be able to articulate why you’re saying what you’re saying.

Spotify has found a lot of success with this in the form of ‘DIBB’ (Data, Insight, Belief, Bet). This is actually quite a similar framework to the one I follow.

In this model, the ‘Data’ would be your discovery lake, and then this is generating the ‘Insight’.

Outlining many of these should happen continuously, just like filling up your discovery lake. But especially when you get a new focus area to think about, it’s important to trawl over your data and see if there are any relevant insights you can pull out to start with.

It’s a little difficult to give you a clear structure for pulling out insights as these will vary greatly based on your circumstances.

That said, here are 3 principles for generating good insights:

- Good insights are observations that highlight a relevant potential problem or opportunity. Bad insights are unrelated to the topic at hand, don’t make an observation about how things are and/or aren’t meaningful.

- Good insights have the courage to be very specific and clear, so they can be wrong. Bad insights are vague enough to never be able to be proven wrong. They can be responded to with “politician’s answers” so as to never be clearly wrong. Bad insights create rhetorical, vague philosophical questions that cannot be tested.

- Good insights are backed by evidence. Bad insights are plucked out of the air, backed by opinions and/or heavily biased misinformation that leads you down incorrect paths. They’re heavily influenced by recency bias and use emotive language that overstates the impact.

When trawling for insights, if you keep these principles in mind then you’ll likely come up with some valuable observations. I’m still refining my system for exactly how to pull out good insights consistently, so maybe this section will change a little over time.

Be careful of confirmation bias in this phase. Confirmation bias is dangerous. You can’t avoid some of it, but be aware. Try your best to avoid coming up with an insight and then going looking for the data to support it. You want insights to arise out of looking at the data, and asking yourself questions that lead you down interesting rabbit holes.

Make these into a list, and link the data that supports them inside it.
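If it helps to picture that list, here’s a rough sketch of it as a tiny data structure. The class and field names are my own assumptions, and the evidence entries are placeholders rather than real links. A doc or a Notion table does the same job. The only point is that every insight carries its supporting evidence with it, so the unbacked ones are easy to spot.

```python
from dataclasses import dataclass, field


@dataclass
class Insight:
    statement: str
    kind: str  # "customer" or "business" value problem
    evidence: list[str] = field(default_factory=list)  # links into your discovery lake

    def is_backed(self) -> bool:
        """An insight needs at least one piece of (even circumstantial) evidence."""
        return len(self.evidence) > 0


insights = [
    Insight(
        "Site visitors on desktop convert into registered customers much more than site visitors on mobile web",
        kind="business",
        evidence=["<placeholder: link to funnel dashboard>"],
    ),
    Insight(
        "People value seeing screenshots and visuals of what is ahead in their journey in order to commit",
        kind="customer",
    ),
]

# Anything in this list still needs evidence before it counts as an insight.
unbacked = [insight.statement for insight in insights if not insight.is_backed()]
```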

Once you’ve fished and caught a load of insights, you’ve got to choose which ones you want to take home and cook.

But how do you prioritise these? It’s too soon to consider solutions.

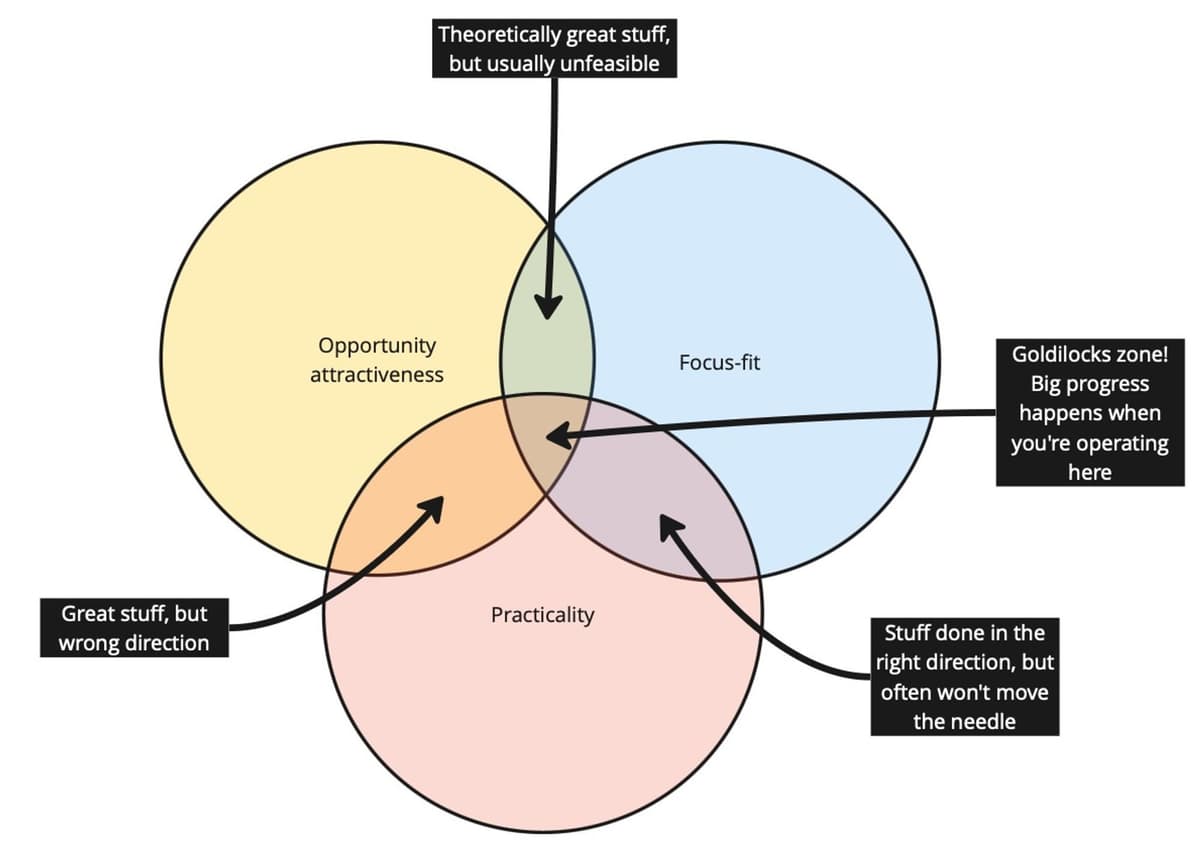

I think about insights prioritisation using the following considerations as guiding lights.

- Focus-fit - Does this insight align with the current focus for you and the team? Is it aligned strategically where the business wants to go? (i.e. not moving in the wrong direction)

- Opportunity attractiveness - Is there a lot of juice that could be squeezed if this insight is true? Is it a massive opportunity or problem? Does it have potential to be a home run hit?

- Practicality - Do we broadly have the right capabilities or talent to act on something here? Does it feel like something could actually be done here? You need to know enough engineering, design and marketing to train your gut on this one - and the better your overall discovery lake, the better you’ll get at it. You won’t have specific solutions yet, so this is on gut.

You might notice that ‘confidence’ isn’t in here. That’s because it’s too soon to be making judgements about actual uplifts. It also will bias you to playing it ‘safe’ too soon. You want to give really interesting, potentially counterintuitive ideas a chance to survive. These often have less clear data, and that impacts confidence. I’ve previously put confidence in here in some quarters as a test. It results in safe bets.

Rank and prioritise your insights based on their closeness to the goldilocks zone.
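I do this ranking on gut, but if you want something to sanity check your gut against, here’s one hedged way to sketch it. The 1 to 5 scores, the class name and the ‘weakest consideration first’ ordering are all my own assumptions for illustration, not a formula to follow. The idea is that an insight only sits in the goldilocks zone if it does reasonably well on all three considerations, so its weakest score matters more than its total.

```python
from dataclasses import dataclass


@dataclass
class ScoredInsight:
    statement: str
    focus_fit: int       # 1 (poor) to 5 (strong), a gut call
    attractiveness: int  # 1 to 5, how much juice there is to squeeze
    practicality: int    # 1 to 5, could we actually act on this?

    def closeness(self) -> tuple[int, int]:
        """Weakest consideration first, total as a tie-breaker."""
        scores = (self.focus_fit, self.attractiveness, self.practicality)
        return (min(scores), sum(scores))


def rank(insights: list[ScoredInsight]) -> list[ScoredInsight]:
    """Order insights from closest to furthest from the goldilocks zone."""
    return sorted(insights, key=lambda insight: insight.closeness(), reverse=True)


# Hypothetical gut scores, purely for illustration.
candidates = [
    ScoredInsight("Huge opportunity, but we can't act on it", 5, 5, 1),
    ScoredInsight("Decent on everything", 3, 4, 3),
    ScoredInsight("Strong on everything", 4, 4, 4),
]

ranked = [insight.statement for insight in rank(candidates)]
```

Note that the insight scoring 5, 5, 1 lands last even though its total beats the ‘decent on everything’ one. That’s deliberate: a huge opportunity you can’t practically act on isn’t in the zone. There’s also still no confidence score in here, for the reasons above.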

Ideas

Ideas are where data and insights turn into hypotheses you can actually test in the real world.

Think of them like taking the fish you’ve caught home, and now you need to decide what to do with them. You need to find recipes to try.

I like to think of discovery as being of two parts. Problem discovery and solution discovery. We’ve now done problem discovery and are into solution discovery.

A common frustration from leaders is teams spending too long on discovery. First, that’s usually because continuous discovery isn’t being done. But putting that aside, in upfront discovery situations, it’s usually because the team is focusing on problem discovery for too long. To leaders, it feels a bit too abstract and it’s very difficult to see that progress has been made. Sadly, it’s also where teams retreat to when they get stuck or don’t know how to do solution discovery.

Let’s just say that if you master solution discovery, you’ll be able to convince leaders that progress is happening, and give confidence that you’re on the right track. Most higher level leaders are pragmatic and don’t take well to anything that could be construed as a waste of time.

The ideas phase is the place to be creative and sometimes counter-intuitive. It’s the place to be whacky. But it’s also the place to borrow and remix ideas and implementations from competitors, adjacents and completely different industries.

Most successful ideas are rhymes or builds or simplifications or variations of playbooks and ideas that have existed in the past. There are very few, if any, “original” ideas out there.

I’ll refrain from ranting on about it much, but it’s important to cover off a common argument that pops up here that experimentation, testing and growth is all incrementalism. This is very rarely the case. What you perceive as ‘innovation’ or the amazing impact of a ‘new design’ is riddled with hindsight bias, and is usually a build or remixing of prior ideas together with some critical thinking about the customer.

You shouldn’t be afraid to steal like an artist. There’s huge impact to be had by doing so, and in many cases it can still end up being innovation when done well.

We want to come up with large volumes of ideas about how we might solve the problems or capture the opportunities represented by your insights. Volume of ideas is key here.

You might be tempted to run an ideation session with the team here to source your ideas.

Most teams would walk into an ideation session right about now. Often some two hour session, or maybe a full day onsite, sourcing ideas from a large group.

To be honest though, sourcing your ideas from an ideation workshop is… dumb.

Before I dive into specifics about generating great ideas… let me explain why ideation workshops are one of the worst sources of ideas and what the value of running an ideation workshop actually is.

Before you burn me at the stake, let me make clear that later on I’ll talk about exactly how to get great ideas from your team. Spoiler alert: it’s not from brainstorming workshops.

Ideation Workshops

A regular frustration with ‘ideation workshops’ with your team is that you always end up with the same kind of generic ideas like “show X on the homepage” or “change this CTA copy”.

In most cases, you’ll end up with ‘average’ ideas. Maybe a couple of nuggets every now and then.

The truth is there’s a huge misconception rampant out there about ideation sessions.

The main purpose of an ideation session in my view is not the actual idea generation at all.

Too many people misinterpret this, and all of a sudden the ideas that came up in a 2 hour brainstorming workshop get prioritised on an impact-effort matrix and made into the roadmap.

I think that’s crazy, and just lazy.

You can’t defer your job as a leader of coming up with great insights and defining direction onto your team from some 2 hour session that everyone dreads being at anyway.

The main purpose of an ideation session with a team in my view is to introduce (or reintroduce) them to a focus and get them engaging with the topic. It is to help them move their headspace into a new one.

The ideas that come out of this almost don’t matter. Some little nuggets might come out of a workshop (who knows!) but most of the reallllly great ideas come from elsewhere or from the team later on.

Know this going into them.

Set this expectation with your team too. Make it clear that some ideas from a workshop might be taken forward (this can help build some momentum and trust with the team of course), but that the ideas themselves from the workshop aren’t the main thing.

Ideation sessions have a ‘greasing the machine’ function more than an actual idea generation one. There are many ways to run ideation workshops with this as a better central purpose. They take much less time. I’ve worked with great designers that have really played with different ways to get people engaged and thinking from different perspectives.

However, the bulk of great insights and ideas for tests come from patiently fishing in your discovery lake, really using your curious and mischievous and exploratory mindset. Having the courage to ask “But what if we…?” of yourself and others.

Ask the dumb questions.

Great ideas come from the team when they’re in the right headspace and using their unique perspectives and expertise to find levers of potential growth. And when others suggest ideas to you, don’t default to why something won’t work, instead explore how it could work.

How to actually get good ideas

I really don’t want this guide to be wishy washy, hand wavy guidance though.

I’m already at risk of that.

I want to give you actionable steps to move forward if you’re stuck or unsure.

So here’s what we’re going to do.

This ultimately isn’t as good as using your own discovery lake, but I’m going to give you some stuff to kickstart you. I’m going to assume you have at least some insight into your own context. In other words, you know some stuff about your customers, your product, how the business monetises and so on.

You’re going to use this contextual information you have and apply the following methods to generate ideas yourself. I’ll give you 3 different ways you can try. When I say you, I mean literally yourself, or maybe with a maximum of 1-2 others. When others are involved, the bystander effect and groupthink are big risks. You don’t want that.

This is you going down a rabbit hole for a couple of days where you clear your calendar of all the pointless meetings you have and just focus on this.

A tip for working with others through the ideation step is that despite duplication of efforts, you should all build your own discovery lakes (feel free to share things of course). Do the deep work independently to come up with ideas without cross-pollination until you’ve each got a solid volume of ideas.

If you cross-pollinate too early, then human instinct will bias the process. You want to come with independently derived ideas then think about others’ ideas in a curious and mischievous and building way. Not in a groupthink way.

Good ideas are shy - they mostly hide when groupthink is around.

Remember though, the best ideas come from having the courage to suggest something you’re interested in seeing play out. Know that many ideas will fail… in fact most ideas will fail. And that’s okay and part of the process.

Be empowered by the fact that in the best product companies in the world, only 5% to 20% of tests run are successful. They don’t run random tests just to make the success rate lower. They succeed only 1 in 20 to 1 in 5 times, even when they actually believe in something enough to build it and run it.
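To make those odds a bit more concrete, here’s a rough back-of-the-envelope sketch in Python. It assumes every test wins independently with the same probability, which is a simplification of mine and not how real backlogs behave, and the function names and the batch of 20 tests are made up for illustration.

```python
def expected_wins(num_tests: int, win_rate: float) -> float:
    """Average number of winning tests in a batch (hypothetical helper)."""
    return num_tests * win_rate


def chance_of_at_least_one_win(num_tests: int, win_rate: float) -> float:
    """Probability that at least one test wins, assuming independent tests."""
    return 1 - (1 - win_rate) ** num_tests


# The 5% and 20% win rates come from the range quoted above;
# the batch size of 20 tests is an assumption for illustration.
for win_rate in (0.05, 0.20):
    wins = expected_wins(20, win_rate)
    chance = chance_of_at_least_one_win(20, win_rate)
    print(
        f"{win_rate:.0%} win rate over 20 tests: "
        f"about {wins:.0f} expected wins, "
        f"{chance:.0%} chance of at least one win"
    )
```

The exact numbers matter less than the shape: a low win rate per test still adds up to wins if you run enough tests you believe in.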

Be bold and don’t let your inner pessimist exclude things or rule things out too early.

There are many methods to generate great ideas. Below are just three - the ones I feel are most teachable and especially useful if you don’t know where to start.

They use your discovery lake and insights you’ve generated.

I call them:

- Add/Augment/Remove

- Playbooks x Context

- The team member pathway

1. Add/Augment/Remove

Ultimately, these are the 3 things you can do with a product. You could straight up do one of them (e.g. add something), or you could do a combination at once (e.g. add something and remove something else simultaneously).

- Add - add something new

- Augment - edit, transform, reposition, reshape something that is already there

- Remove - get rid of something

To get your idea juices flowing, take one of your insights, take a look at your context, and then ask the question “What could I add / augment / remove in my product to influence the insight, given my context?”.

For example, let’s use Skyscanner (a flights search engine). Some insight could be “our users say they love us because they find the cheapest flights on our platform”.

Okay, what could we add / augment / remove that helps us do this better?

We could add price indicators on our month view for selecting the date to fly.

We could change them from being traffic light highlights to specific monetary values.

We could remove flight providers with unethical checkout practices, such as unbundling expected services like baggage from their price in order to rank higher.

You get the picture.

Generate many, many of these.

These are testable hypotheses, and we can prioritise them.
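If it helps to be mechanical about it, the question above can be turned into a small prompt generator. This is only a sketch: the `idea_prompts` helper, the sample insight and the sample context line are all hypothetical, and what comes out is a list of questions to answer yourself, not finished ideas.

```python
from itertools import product

# The three things you can do with a product.
ACTIONS = ("add", "augment", "remove")


def idea_prompts(insights, contexts):
    """Pair every insight with every piece of context and every action."""
    return [
        f'Given "{context}", what could we {action} to influence "{insight}"?'
        for insight, context, action in product(insights, contexts, ACTIONS)
    ]


# Hypothetical inputs, loosely based on the flights example above.
insights = ["users love us because they find the cheapest flights"]
contexts = ["most users pick dates on the month view"]

for prompt in idea_prompts(insights, contexts):
    print(prompt)
```

Feed it a handful of insights and context notes and you quickly get dozens of prompts, which is the volume you’re after.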

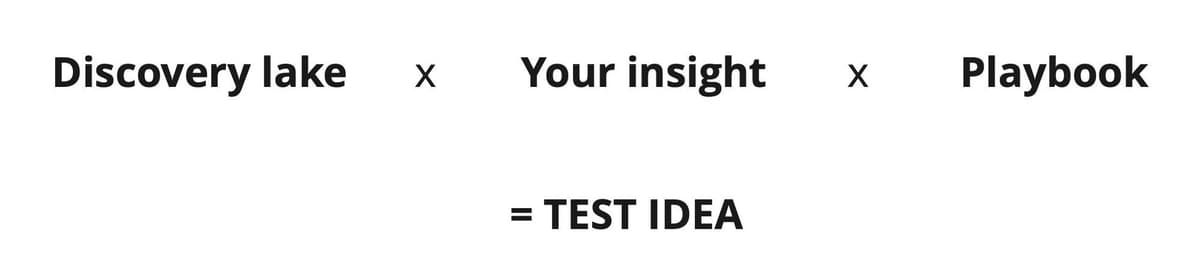

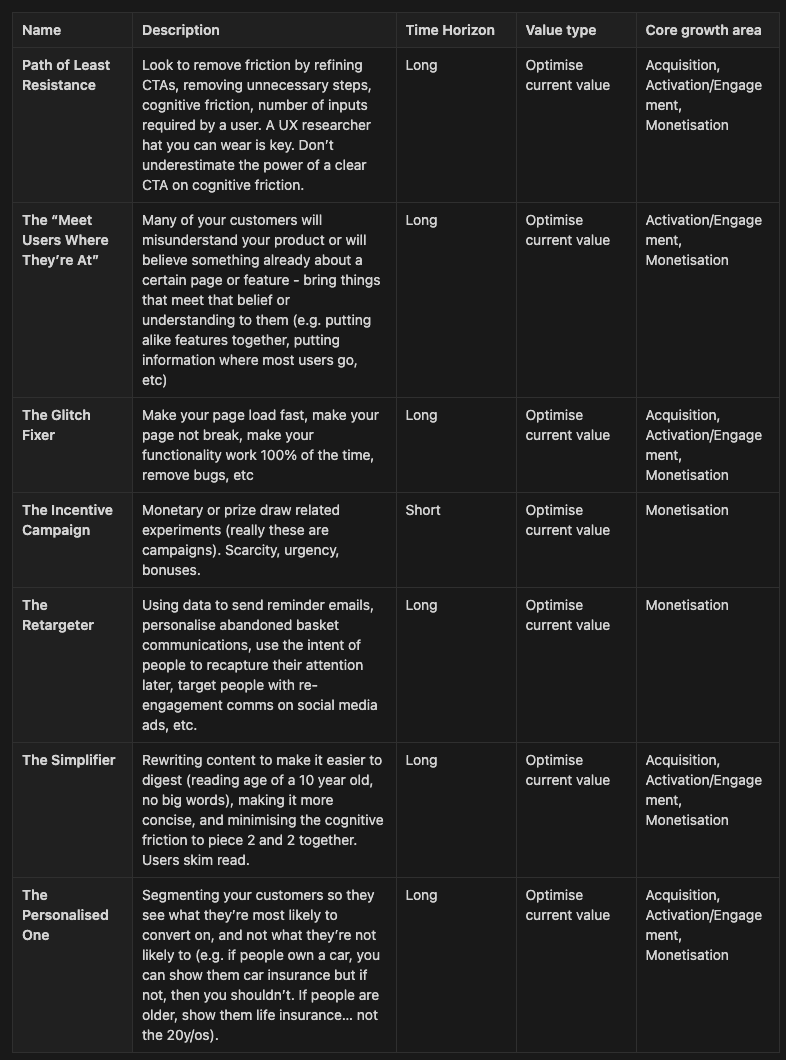

2. “Playbook remixing” - using a playbooks database and combining them with your context

A playbook is simply a time-tested formula used by yourself or that others have used with success.

Remixing is taking an insight, then looking back at your discovery lake and mixing and matching that insight against different playbooks and the ways you’ve seen other companies find success.

If you’re realllly struggling, then use the playbooks I’ve provided but make sure to match them up against your context and what you think might work for your customers. Some of these playbooks overlap with the Add/Augment/Remove model, but a lot of them are more specific. I’ve tried to include a load of real examples of how others have implemented them.

Use your context and your focus and apply it to the situation you’re finding yourself in.

The key, again, is to generate the volume of ideas. Good ideas almost always come from a big volume of information, not from some limited set. We’ll touch on prioritisation later.

Take your context and combine it with the ‘playbooks’ below as prompts for coming up with ideas for your situation. Each one encapsulates many angles. It’s more a matter of spending some time thinking critically, and slightly logically (but not too logically), about whether one of these playbooks is relevant for testing in your situation.

I’ve put 7 of the playbooks I’ve personally used or seen used directly in this guide, in the screenshot below. You can see the ever-growing list I keep of them (currently 49) by emailing me.

3. The *right* team member pathway!

Now, going back to how you get great ideas from your team - here’s how to do it well without everyone dreading another workshop.

The most amazing ideas from left field come from the team and from others. You need to build up trust through behaviour and not through just words that you will listen to these ideas and you will execute on some of them. This encourages a virtuous loop. You’ll surprise yourself with some of the results.

For example, one of my devs asked the question “What happens if we remove the user review star ratings from the listings on our product list page?”, with a little rationale of why they thought this might help. It sounds obvious to anyone in eCommerce or other spaces that the stars “help” customers to decide between options.

To my massive surprise… conversion went up end to end by over 2% by removing the star ratings. Odd, huh?

I won’t go into the why right here too much (TL;DR - user side eligibility restricting coverage to certain providers targeted for those lower eligibility users but don’t offer as good perks), but the point is that sometimes even the ideas that you disagree with or rule out early on can prove you wrong.

We all come with a load of biases.

The key thing is that you don’t want left field or completely irrelevant ideas (despite them sometimes being great ones). Put out a request for ideas of a specific flavour. In other words, share specific top level insights (like “we noticed that mobile web site visitors convert to registered users much less than desktop”) and request ideas as to why that might be the case in the form of practical things you might test.

You don’t need a formal system for submitting ideas. Don’t introduce a formal process too early, instead do stuff that doesn’t scale - let people directly hit you up with ideas. Jump on a quick call and ask them really open minded questions, truly make it clear you’ve listened and noted their idea. Maybe it won’t be implemented, but even so, they’ll feel heard and appreciated.

Make sure your team and stakeholders and others around the business become aware of who they can raise these ideas with. In the end, people like talking to people, not to forms that may or may not get read. Listen to understand, and thank them for their ideas. If something seems good, execute on it and tell them you’re doing it and tell them the results! Nothing builds better cross-organisational buy-in than you actually doing things. I strategically prioritise some of these, especially ones I believe to be less than a couple hours work to build almost purely for the stakeholder buy-in purpose. It builds trust.

Prod the team or others for their ideas. Tailor it to who they are and what you know about them. For example, if they’re an iOS engineer - ask them how you might address the insight from an iOS perspective. You can find growth levers in the specifics.

Again… volume of ideas.

Prioritisation

A healthy ideas backlog sets you up for success.

Once you’ve got some ideas, we’ve got to prioritise them somehow of course.

Prioritisation is extremely important because otherwise you’re just spraying and praying things that sound interesting. Or everything becomes a priority.

People love RICE for prioritisation. Especially in product management and growth circles.

RICE is just a method of stack ranking your ideas by scoring them on factors that make an apples-to-apples comparison possible. Reach * Impact * Confidence / Effort.
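For anyone who hasn’t seen it written down, here’s a minimal sketch of that formula in Python. The `Idea` class, the two ideas and every number in it are hypothetical, and the scales (reach as users per quarter, impact as a rough multiplier, confidence as a fraction, effort in person-weeks) are assumptions of mine, since teams define them differently.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    reach: float       # assumed: users affected per quarter
    impact: float      # assumed: rough multiplier, e.g. 0.5 to 3
    confidence: float  # assumed: fraction between 0 and 1
    effort: float      # assumed: person-weeks

    @property
    def rice(self) -> float:
        """Reach * Impact * Confidence / Effort."""
        return self.reach * self.impact * self.confidence / self.effort


# Hypothetical ideas with made-up scores, purely for illustration.
ideas = [
    Idea("Price indicators on month view", reach=5000, impact=2, confidence=0.8, effort=4),
    Idea("Monetary values instead of traffic lights", reach=5000, impact=1, confidence=0.5, effort=1),
]

# Stack rank: highest RICE score first.
ranked = sorted(ideas, key=lambda idea: idea.rice, reverse=True)
for idea in ranked:
    print(f"{idea.rice:>8.0f}  {idea.name}")
```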

I lived by this method early on.

The truth is though, it’s largely pretty dumb when you scratch at it with any rigour.

Most of the time, the ideas you (or someone else) wanted to see prioritised are exactly the ones that come out the other side on top.

That’s because everything that goes into making it an apples-to-apples comparison is mostly a finger in the wind, filled with biases. It also falls apart when there are must-dos in the mix. When different kinds of expertise are required. When there are sequencing problems. When you don’t consider your current momentum. The list goes on and on.

If I’m completely honest, reflecting back on the countless times I’ve done the process - I find that its main purpose was to justify a decision to do X instead of Y to stakeholders.

“We weighed it up against these other things and this one has the most potential”.

In a product management or growth lead interview setting, they’re mostly looking for you to use RICE or some variant of it. For high level hypothetical purposes - so be it. But in the real world, where you’re deciding real things to work on and do over other things, and dealing with complete uncertainty on impact, it’s much less useful.

Alright then smart ass, how the hell do I take my many, many ideas and make them actually usable then? How do I pick which ones to do?

The truth isn’t a comfortable one. And it’s going to sound icky at first.

But let it sit for a bit.

There’s too much nuance to wrap up in some simple formula.

I recognise that you might totally be thinking “geezo this dude has no idea what he’s talking about now?”. I’m not trying to be a midwit here though.

If from your deep understanding of customers it’s not obvious what sounds most valuable for your customers to prioritise, then firstly you probably don’t understand your customers enough. Your gut should be well trained about what might resonate with your customers.

If it’s still difficult to prioritise something over something else, then your goal likely isn’t clear enough.

But it’s more complicated than that.

I’ll suggest a bit of an unconventional approach to prioritisation that you might gag thinking about. The truth is that it is what I primarily use for day to day decisions though. It’s practical.

Your team are not output robots.

They’re humans.

And until we have AI that does all the work for us, humans are the best we’ve got.

We’re social and collaborative and emotional animals, we are not machines.

We thrive on successfully working together to do things of value.

So I’ve found it extremely powerful to prioritise using a less rigid and a more ‘gut-driven’ model that accounts for the humans involved.

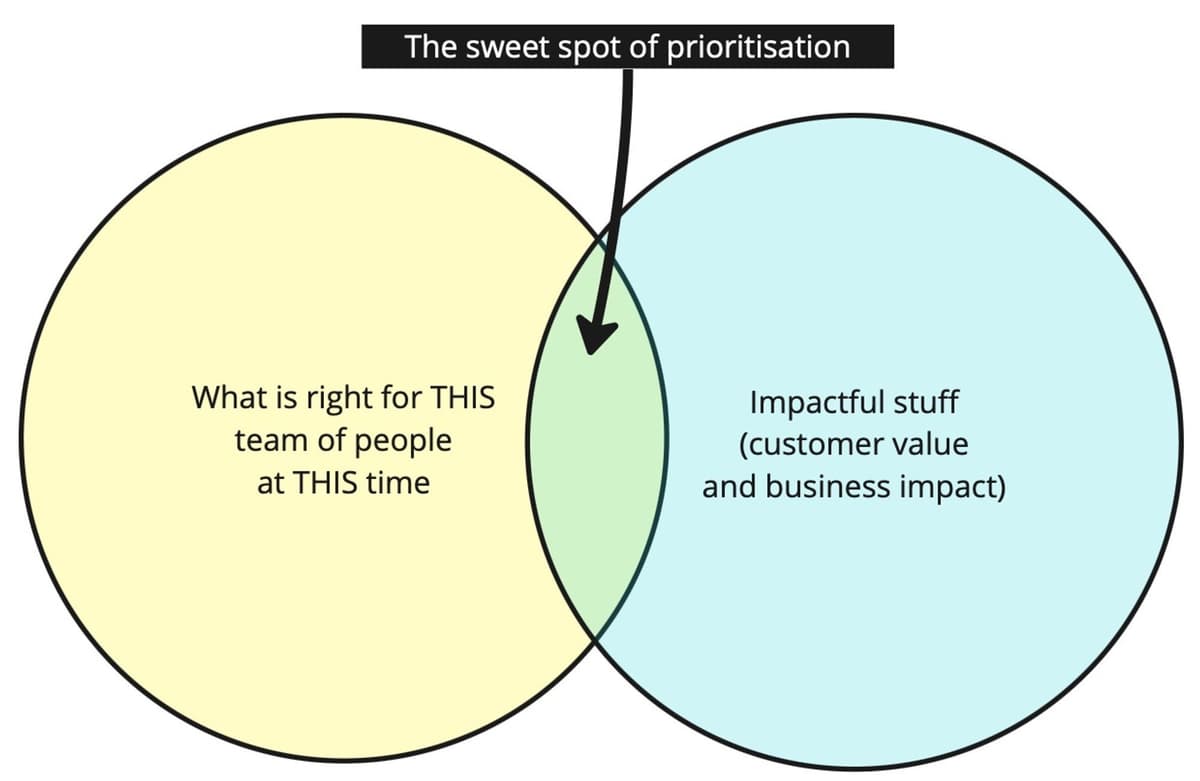

It is a balance between:

- What is right for this team of people at this time; and

- Impactful stuff.

Both are riddled with unknowns, so training your gut with experience on it is important.

Learning where the sweet spot is.

Let me explain.

1. What is right for this team of people at this time?

You need to have your finger on the pulse of how a team is. The devs, designers, and everyone else. How they’re feeling, and how they’re working.

This cannot be measured, despite what some agile coach or scrum master might tell you. Put your story point graphs away. Actually, just put them in the bin while you’re at it.

What is right is based on a feeling. A feeling that you can only understand if you are deeply embedded within the team and have good relationships with its members.

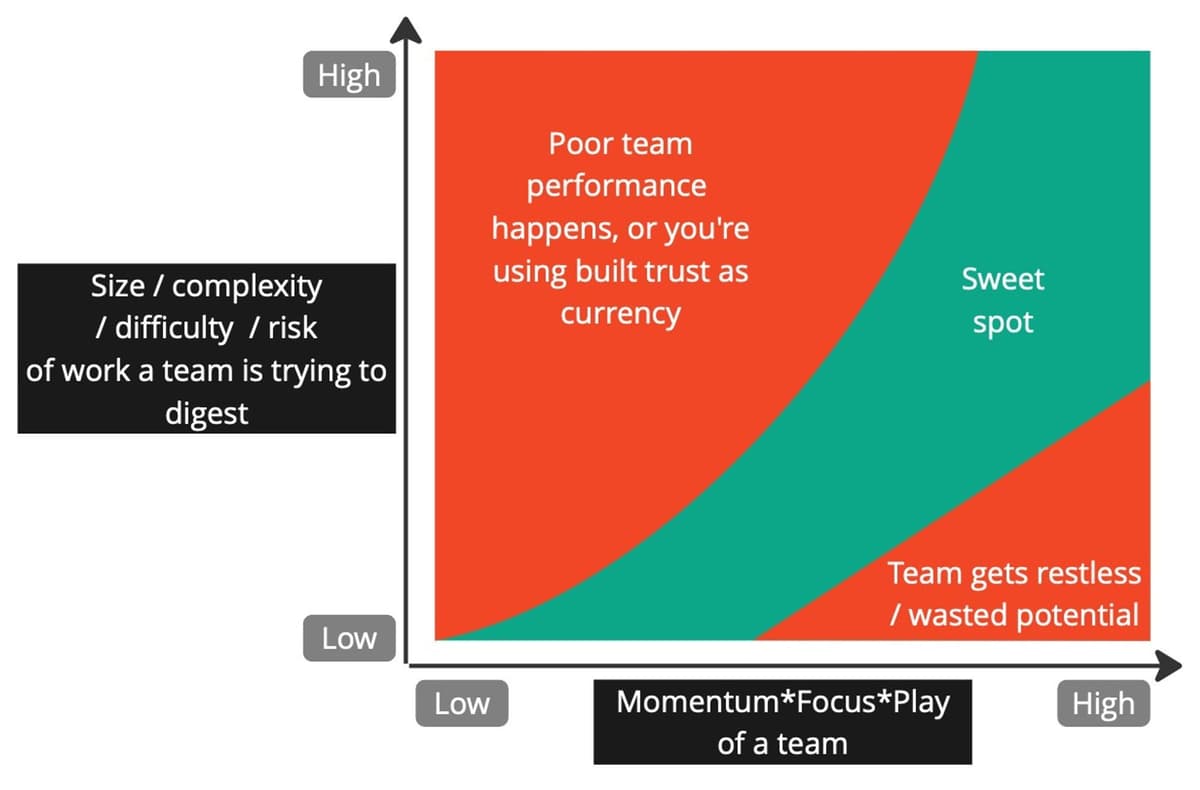

The best way that I can articulate the different ‘stages’ of feelings is a formula I call “Momentum * Focus * Play.” I also find it correlates with overall team productivity, or speed. The most practical and realistic approach is to pass through these stages in this order too.

A team with no momentum should have “smaller”, momentum building work prioritised. Action breeds action that can then be redirected.

Teams with momentum can be focused onto more of the “right” things. The things that are a bit ‘bigger’ and that’ll more likely shift the needle. That’s giving them focus.

Then, a team can reach a state of play. A team that has play will show up motivated and deliver faster. People on the team are enjoying the work they’re doing. A team that has momentum and focus generally reaches play quite organically, as the shipping and winning stacks up… teams thrive on shipping and winning.

Play has another angle too though - this is where teams get more experimental. They can tackle greater risk - more whacky ideas. This is where the magic happens. It’s where the team feeds on their own success with greater success, and where ideas that could’ve never been conceived upfront arise.

A team with momentum, focus and play is hard to describe to someone who has never been in one. It feels like you’ve got a star in Mario Kart and you’re invincible, rapidly propelling forward and everything feels fun.

I’ve yet to cultivate a team that has momentum, focus and play that doesn’t drive massive user and business impact.

That’s not to say there isn’t sometimes important shit that just needs to get done without much choice involved. That happens.

In the end though, as leaders we have to hone skills of trust, influence and incentivisation.

If you’ve built strong trust with your team, built trust becomes a currency you can cash in to get some things done that they mightn’t ordinarily be bought into or ready for.

Don’t go into a trust debt and expect no consequences.

You want to prioritise the thing that is right for the team at this point in time. Playing in this sweet spot is an accelerant for creating a high performing team.

If your team has no momentum, pick some very low difficulty hypotheses to test. If your team has high momentum and focus and play, you can afford to pick up chunky, ambitious tests.

A common mistake in prioritisation is to make it a democratic operation. That’s what RICE and other frameworks like voting on something are able to do quite well.

But it pains me to say that prioritisation by committee almost never works. It’s nice in theory, but horrible in practice and for real impact. This is because compromise leads to wearing one brown shoe and one black shoe out to the school dance, like a fool.

Someone on the team is the growth lead. They are in the best place to understand the context, the focus, the prior tests, the team dynamics, the various internal and external pressures in the team, listen to the team, and so on. They need to build trust with the team that they’ll make a good, or at least well considered, call.

They have to make the ultimate decision and convince everyone else to back that decision. Sometimes that’ll use trust built as currency, but if you’re in the sweet spot it costs nothing.

I have more thoughts on building what I call a ‘rally car’ team - but that’s probably something for another time.

2. Impactful stuff

First, what is in your control is whether an idea points in the direction of your stated focus (problem to solve). This is the most important and easiest filter to apply.

For example, you likely wouldn’t do a monetisation test on the pricing page if your stated focus is to improve activation of your product post-sale.

That’s not rocket science.

Regarding size of impact though, the picture gets a bit more complicated.

The truth with RICE and other frameworks is that no one knows how impactful stuff will be. We can make educated guesses and delude ourselves with impact forecasts, but at some point of experience you realise that you just don’t know. The smallest changes can have big impact, and the biggest changes can be flops.

Good experimentation and testing is used as a tool of humility. It’s about doing what we believe in, but being humble enough to know that we’re not sure what will work.

“Design it like you’re right. Test it like you’re wrong.” is what one of my mentors drilled into my brain.

Opportunity sizing and uplift potential is an art more than a science. Eye up the size of the prize, but don’t get confident until you have the data from actually running the test. In predicting the size of an opportunity, be data-informed. Not data-led or data-driven. Make decisions combining the data you have, alongside your gut.

It sounds like a huge cop-out, but it’s the truth behind why most tests that turn out to be amazingly impactful get run. They simply wouldn’t be prioritised on the hard data alone.

Some people like to claim they’re messiahs that knew by analysing the data exactly what to do to capture some prize. Maybe those people exist. I’ve yet to meet one that hits any more consistently than one that combines the hard data with the glued together soft data in their head and the feel in their gut.

Being data-informed and training your gut will empower you to run tests you otherwise wouldn’t have prioritised.

You train your gut by being extremely close to your users, the business, the product, the industry, and more. This is why the discovery lake isn’t some performative exercise. It’s a continuous habit of bathing in the context and being a true domain expert in your users and business. This is the true product manager role, but in a product manager’s absence, whoever the growth lead is must take this on.

By practicing finding the sweet spot between what is right for this team at this time and what could be impactful, you will hone your skills. It’s like training to run a marathon: you combine what the data tells you about how to approach the training with what your body is telling you.

From here, you’ll take items from the top of your prioritised list and set up a good test to actually build and run.

But I’m calling it here, for now

Ooft, that’s been a long read.

Like 10,000 words long.

Maybe you just skimmed it.

Regardless, if you made it here - nice work.

You’ve read my first pass at practically thinking about and doing product discovery, especially in growth contexts.

From this guide you should now:

- Understand the idea and value of continuous discovery and know how to build your own discovery lake

- Be able to generate insights from your lake

- Have frameworks for coming up with a whole load of ideas based on your insights, and

- Know how to prioritise those ideas based on your knowledge of your product and customers and where your team is.

Next, you’ll want to look at how to ensure you’re designing good tests of the ideas you’ve prioritised, but that’s a topic I’ll have to write about another day.

In the meantime, if you want a great book for running good tests, pick up this one called Trustworthy Online Controlled Experiments on Amazon or wherever. It’s mainly focused on A/B testing, but this kind of test is the bread and butter of the top right quadrant where you are - so it’s a good next step.